The 'surprisingly simple' arithmetic of smell

Jan 2022, phys.org

The age of artificial olfaction is upon us.

This is now the second report in the last few months that presents a computational model for the olfactory bulb, which is the biological supercomputer on your face that crushes gigatons of databytes per attosecond (slight exaggeration).

The previous paper came from a physicist working on information theory (Tavoni et al at the University of Pennsylvania). Another paper, from the month before that, came from none other than the lab that discovered olfactory receptors. It too found a computational model, this one discovered via machine learning, that compresses the high dimensionality of odorant sensory data.

But again, this new paper comes primarily from a department of electrical and systems engineering, in collaboration with a biomedical engineering department. I don't know everything that's going on (I only read the papers on the weekends), but that's a lot of papers about computing in olfaction, from people who don't study olfaction exclusively.

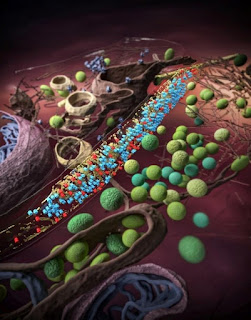

And this is at the level of the bulb. We're not talking about the DREAM project, where a big-data's worth of words and molecules is processed by machine learning to predict the names of smells. This is about looking at the hardware. How in the world does that bulb compress the input from thousands of receptors, themselves receiving information from uncountable stimuli, into dozens of signals that go on to control the entire enormity of a mammalian body via its limbic system? The bulb is the choke point for this system, and it's using magic that we are only now beginning to understand well enough to copy.

I doubt this is the earliest example, but as far back as 1991, scientists were talking about olfaction as a model system for computational neuroscience. These were neuroscientists and psychologists, but even they could see the significance -- it's literally wired like the deep learning neural networks you hear about in the news (you know, powering the AI in your toaster, your tissue box and your alarm clock).

It really looks like we're getting the hang of this. The Washington University team started with a simple question: how come things smell the same to us, even in different contexts or environments? It's like how a plaid shirt looks okay in your sunlit bedroom, but later looks like a shit sandwich under the fluorescent lights of your office (or remember the blue-and-black versus white-and-gold dress? Maybe you're trying to forget).

If smells come from evaporating molecules, which are literally volatile, changing all the time based on environmental conditions, how come they always smell the same to us? Maybe olfaction would be a good model to investigate.

So they did, by training locusts to associate a smell with a food reward under all kinds of different conditions: hungry, full, hot, cold, humid, dry. Every time, the locust recognized the trained smell (responding with the locust equivalent of a salivating dog). Yet "The neural responses were highly variable," one of the researchers said. Same molecule, same behavior, but a completely different pattern of neural activity every time. It just doesn't make sense.

Deep learning to the rescue (obviously). The model found that the answer lies in the interplay between neurons an odor switches on (ON neurons) and neurons it switches off (OFF neurons). I'll just quote the write-up directly:

Finding the features you want is similar to the information conveyed by the ON neurons. Absence of deal breakers is similar to silencing of the OFF neurons. As long as enough ON neurons that are typically activated by an odorant have fired—and most OFF neurons have not—it would be a safe bet to predict that the locust will open its palps in anticipation of a grassy treat.
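The ON/OFF rule in that quote is simple enough to write down as arithmetic. Here's a toy sketch in Python, assuming a hypothetical population of 100 neurons in which neurons 0 to 9 are the trained odor's ON ensemble and 10 to 19 its OFF ensemble, and reading "enough" and "most" as 50% thresholds. The function names, firing probabilities and thresholds are my own illustrative choices, not the model or parameters from the Nizampatnam et al paper.

```python
import random


def recognizes_odor(active, on_set, off_set, on_frac=0.5, off_frac=0.5):
    """Toy ON/OFF vote with hypothetical thresholds.

    active  -- set of neuron ids that fired on this trial
    on_set  -- neurons the trained odor usually activates ("features you want")
    off_set -- neurons the trained odor usually silences ("deal breakers")
    Returns True if at least on_frac of the ON neurons fired and
    fewer than off_frac of the OFF neurons fired.
    """
    on_fired = len(active & on_set) / len(on_set)
    off_fired = len(active & off_set) / len(off_set)
    return on_fired >= on_frac and off_fired < off_frac


def noisy_trial(rng, on_set, off_set, n_neurons=100,
                p_on=0.7, p_off=0.2, p_other=0.3):
    """Simulate one presentation of the trained odor (hypothetical
    probabilities). Each neuron fires independently, so the exact
    firing pattern changes from trial to trial."""
    active = set()
    for i in range(n_neurons):
        if i in on_set:
            p = p_on
        elif i in off_set:
            p = p_off
        else:
            p = p_other
        if rng.random() < p:
            active.add(i)
    return active


if __name__ == "__main__":
    on_set = set(range(0, 10))    # hypothetical ON ensemble
    off_set = set(range(10, 20))  # hypothetical OFF ensemble
    rng = random.Random(0)        # fixed seed so runs are repeatable
    trials = [noisy_trial(rng, on_set, off_set) for _ in range(200)]
    distinct = len({frozenset(t) for t in trials})
    hits = sum(recognizes_odor(t, on_set, off_set) for t in trials)
    print(f"{distinct} distinct firing patterns, odor recognized on {hits}/200 trials")
```

Run it and nearly every simulated trial produces a different firing pattern, yet the odor is recognized on the large majority of them. No individual neuron has to show up every time; the vote only needs enough of the usual ON neurons and not too many of the OFF ones.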

via the Department of Electrical and Systems Engineering and the Department of Biomedical Engineering, Washington University in St. Louis: Srinath Nizampatnam et al, Invariant odor recognition with ON–OFF neural ensembles, Proceedings of the National Academy of Sciences (2022). DOI: 10.1073/pnas.2023340118

And further reading:

via University of Pennsylvania: Gaia Tavoni et al, Cortical feedback and gating in odor discrimination and generalization, PLOS Computational Biology (2021). DOI: 10.1371/journal.pcbi.1009479

via Massachusetts Institute of Technology's McGovern Institute for Brain Research: Peter Y. Wang et al, Evolving the olfactory system with machine learning, Neuron (2021). DOI: 10.1016/j.neuron.2021.09.010

via MIT: J. L. Davis and H. Eichenbaum (eds.), Olfaction: A Model System for Computational Neuroscience, Bradford Books/MIT Press (1991).

Deep Nose, 2022

Signal to Noise for the Win, 2021

Olfactory Overload, 2021

Image credit: Inhibitory Synapse - TAO Changlu, LIU Yuntao, and BI Guoqiang; Image design: WANG Guoyan, MA Yanbing - 2021
